Everything about RAG and its future

The AI secret you did not know about...

Ever wondered how AI can access vast amounts of information without becoming a bloated mess?

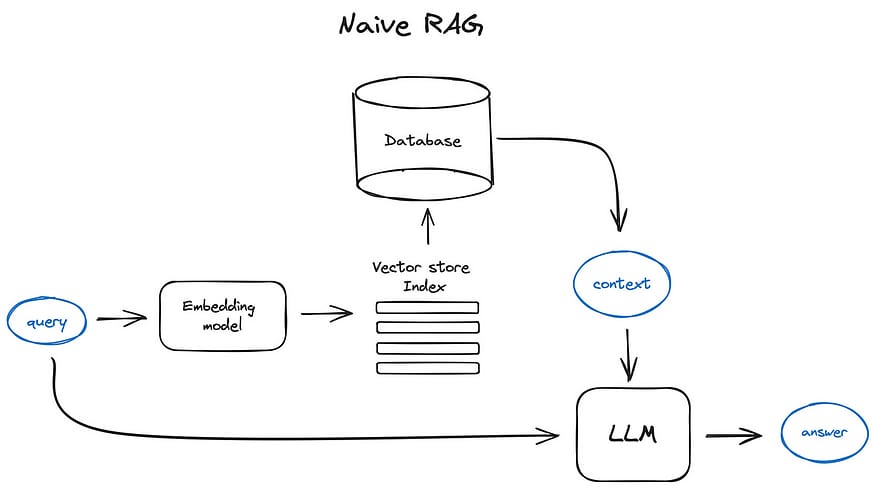

Enter RAG: Retrieval-Augmented Generation. It's not just another acronym; it's revolutionizing how AI looks up information and responds.

Here's my take on RAG and why it's crucial 👇

The RAG Revolution

Remember when chatbots were as useful as a chocolate teapot?

Those days are gone.

RAG is the secret sauce making AI actually... intelligent.

- It's like giving AI a turbocharged memory
- It combines the power of language models with external knowledge fetched at query time
- The result? More accurate, up-to-date, and context-aware responses

But here's the kicker...

RAG isn't just improving AI - it's changing the game entirely.
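To make the "language model plus external knowledge" idea concrete, here is a minimal sketch of the retrieval half of RAG. It is a toy under loud assumptions: the three documents are made up, and the word-count cosine similarity is a stand-in for the embedding search a real system would more likely use. The resulting prompt is what you would hand to whatever model you use.

```python
import math
import re
from collections import Counter

# Made-up knowledge base for illustration; swap in your own documents.
DOCUMENTS = [
    "Our refund policy allows returns within 30 days of purchase.",
    "Support is available by email from Monday to Friday.",
    "The premium plan includes priority support and unlimited projects.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Put the retrieved passages in front of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("What is the refund policy?", DOCUMENTS))
```

The model itself never changes here; only the context it sees does, which is why new documents can be added without retraining anything.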

However, RAG might not be here for too long: Anthropic has been working on ways not only to reduce Claude's cost but also to improve its speed, and on 14th August 2024 it introduced Prompt Caching.

First, let’s understand the difference…

RAG vs. Prompt Caching: The Showdown

Now, you might be thinking, "What about Prompt Caching? Isn't that the next big thing?"

Well, let's break it down:

| RAG | Prompt Caching |
| --- | --- |
| Dynamically retrieves relevant info | Reuses an already-processed prompt prefix instead of reprocessing it |
| Adapts to new data effortlessly | Lightning-fast for requests that repeat the same context |
| Ideal for complex, evolving knowledge bases | Perfect for static context reused across many questions |

The verdict? They're not rivals; they're dance partners. Use RAG when you need flexibility, Prompt Caching when speed is king.
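Here is a rough sketch of what the Prompt Caching side can look like, assuming the request shape of Anthropic's Messages API, where a `cache_control` field of type `ephemeral` marks the end of the prompt prefix to cache. The function name, the document text, and the `your-claude-model` string are placeholders of mine, and the snippet only builds the request body; it does not call any API.

```python
import json


def build_cached_request(model, reference_text, question):
    """Build a request body whose long, static system block is marked cacheable."""
    return {
        "model": model,
        "max_tokens": 512,
        "system": [
            {
                "type": "text",
                "text": "Answer questions using the reference document.",
            },
            {
                "type": "text",
                "text": reference_text,
                # Marks the prefix up to and including this block as cacheable.
                "cache_control": {"type": "ephemeral"},
            },
        ],
        # Only this part changes between requests.
        "messages": [{"role": "user", "content": question}],
    }


if __name__ == "__main__":
    handbook = "Placeholder for a long, rarely changing document."
    first = build_cached_request("your-claude-model", handbook, "Summarize chapter 1.")
    second = build_cached_request("your-claude-model", handbook, "Summarize chapter 2.")
    print(json.dumps(first, indent=2))
    print(first["system"] == second["system"])
```

The design point: the static part stays byte-for-byte identical across requests and only the question varies, which is what lets the provider reuse its earlier work on the prefix.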

The Future of RAG: It's Mind-Blowing

Hold onto your hats, because the future of RAG is wilder than a rollercoaster ride through a thunderstorm.

Here's what's coming:

- Hyper-personalized AI assistants
- Real-time knowledge integration
- Multi-modal RAG (text, images, video - the whole shebang)

But wait, there's more! Custom LLMs are entering the chat, and they're bringing RAG to the party.

Custom LLMs + RAG = AI Supernova

Imagine an AI tailored to your industry, your company, your brain. That's what custom LLMs bring to the table. Now, mix that with RAG, and you've got an AI that's not just smart - it's scary smart.

Here's how it works:

- Build a custom LLM trained on your specific domain
- Integrate it with RAG for real-time knowledge retrieval
- Result? An AI that thinks like you, but faster and with perfect recall
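The wiring behind those steps can be sketched in a few lines. Everything named here is hypothetical: `DomainAssistant`, `keyword_retriever`, and `stub_model` are illustrative stand-ins, with `stub_model` taking the place of a real custom LLM and the keyword match taking the place of a real retriever.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class DomainAssistant:
    """Pairs any retriever with any model; both are plain callables."""

    retrieve: Callable[[str], List[str]]
    generate: Callable[[str], str]

    def answer(self, question: str) -> str:
        # Step 1: fetch domain knowledge relevant to the question.
        passages = self.retrieve(question)
        # Step 2: hand the model the passages plus the question.
        prompt = "Context:\n" + "\n".join(passages) + f"\n\nQuestion: {question}"
        return self.generate(prompt)


def keyword_retriever(notes: List[str]) -> Callable[[str], List[str]]:
    """Hypothetical retriever: keep notes sharing at least one word with the question."""

    def retrieve(question: str) -> List[str]:
        words = set(question.lower().split())
        return [note for note in notes if words & set(note.lower().split())]

    return retrieve


def stub_model(prompt: str) -> str:
    """Stand-in for a custom LLM: it just echoes the prompt it was given."""
    return "ANSWER BASED ON:\n" + prompt


if __name__ == "__main__":
    notes = ["Invoices are due within 15 days.", "Onboarding takes two weeks."]
    assistant = DomainAssistant(keyword_retriever(notes), stub_model)
    print(assistant.answer("When are invoices due?"))
```

Because the model and the retriever are separate pieces, either one can be upgraded without touching the other.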

The Open Source Buffet

Want to get your hands dirty with RAG? Here's a smorgasbord of open-source goodness:

- LangChain: The Swiss Army knife of RAG
- Haystack: For when you need industrial-strength RAG
- LlamaIndex (formerly GPT Index): Because sometimes, you just need to index everything
- FAISS: Facebook's gift to the RAG world
- Sentence Transformers: For when you need to understand... well, sentences

Learning: The future belongs to those who can harness the power of RAG and custom LLMs. It's not just about having a big brain; it's about knowing how to use it.

The Breakthrough

Remember when AI was just a glorified search engine? Those days are gone. With RAG and custom LLMs, we're entering an era where AI doesn't just retrieve information - it understands and reasons with it.

But here's the million-dollar question: Are you ready to ride this wave?

Because let me tell you, the companies that master RAG and custom LLMs aren't just going to survive - they're going to thrive.

So, what's your RAG strategy?

Hit reply and let me know - I read every email, and I'm dying to hear your thoughts on this AI revolution.

See you tomorrow, when we'll dive into another mind-bending aspect of the AI world!

Keep innovating 🚀

Best Regards,

Udit Goenka

Founder & CEO

TinyCheque